Projects

Machine Learning, Computer Vision (and Language)

At Yahoo I work primarily on machine learning research for vision and/or natural language applications. Some of this work leads to research publications, which are described here.

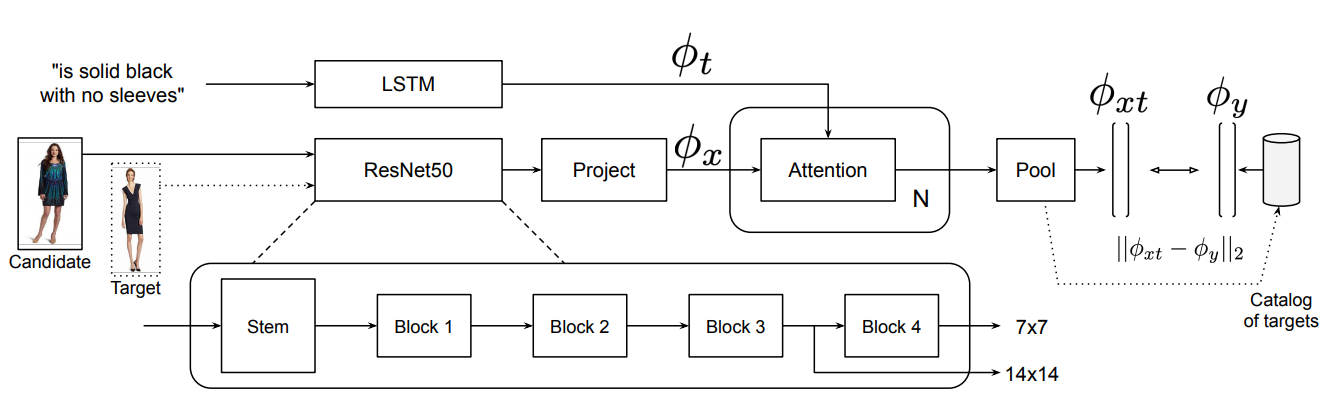

Modality-Agnostic Attention Fusion for visual search with text feedback

(paper) Eric Dodds, Jack Culpepper, Simao Herdade, Yang Zhang, Kofi Boakye

Visual search with text feedback provided an interesting (and potentially useful to Yahoo) testbed for combining signals from multiple modalities using dot-product attention. We found the technique effective once we got the details right, and we found some interesting and surprising phenomena in the text-image attention maps. We followed up this work with a study of pretraining and evaluation methods for this task, which is under review for publication (as of September 2021).
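
As a rough illustration of fusing modalities with dot-product attention, here is a minimal NumPy sketch. Image-region features and text-token features, assumed to be already projected to a shared width, are concatenated into one sequence and passed through a single scaled dot-product self-attention layer, so every token attends to every other token regardless of modality. The dimensions, random projection weights, and final mean-pooling are illustrative assumptions, not the architecture from the paper.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product attention over a token sequence."""
    q, k, v = tokens @ w_q, tokens @ w_k, tokens @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])  # (n_tokens, n_tokens)
    weights = softmax(scores, axis=-1)       # each row sums to 1
    return weights @ v, weights

rng = np.random.default_rng(0)
d = 64  # shared embedding width (assumed)

# Hypothetical inputs: 49 image-region features (a 7x7 grid) and 8 text-token features.
image_tokens = rng.normal(size=(49, d))
text_tokens = rng.normal(size=(8, d))

# Concatenate so attention treats both modalities identically ("modality-agnostic").
tokens = np.concatenate([image_tokens, text_tokens], axis=0)

# Random projection weights stand in for learned parameters.
w_q, w_k, w_v = (rng.normal(scale=d ** -0.5, size=(d, d)) for _ in range(3))
fused, attn = self_attention(tokens, w_q, w_k, w_v)

# Mean-pool the fused tokens into one query embedding for retrieval (assumed).
query_embedding = fused.mean(axis=0)
print(query_embedding.shape)  # (64,)
print(attn[:49, 49:].shape)   # image-to-text block of the attention map: (49, 8)
```

Slices of `attn` such as the image-to-text block are the kind of text-image attention maps that can be inspected after training.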

Learning Embeddings for Product Visual Search with Triplet Loss and Online Sampling

During my internship at Yahoo (then Verizon Media) I applied online sampling techniques for the triplet loss and found some other tricks to train state-of-the-art (at the time) models for visual search. Business applications have focused on matching products with content for commerce and advertisement. We also posted a technical report on the work to arXiv.
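
For readers unfamiliar with online sampling for the triplet loss, here is a minimal NumPy sketch of one common variant, batch-hard mining: for each anchor in a batch, the hardest positive (farthest embedding with the same label) and the hardest negative (closest embedding with a different label) are chosen on the fly from the batch itself. The margin value and the choice of batch-hard mining are assumptions for illustration; this is not necessarily the sampling strategy described in the technical report.

```python
import numpy as np

def pairwise_distances(emb):
    """Euclidean distance matrix between all rows of emb."""
    sq = np.sum(emb ** 2, axis=1)
    d2 = sq[:, None] - 2.0 * emb @ emb.T + sq[None, :]
    return np.sqrt(np.maximum(d2, 0.0))  # clip tiny negatives from round-off

def batch_hard_triplet_loss(emb, labels, margin=0.2):
    dist = pairwise_distances(emb)
    same = labels[:, None] == labels[None, :]
    eye = np.eye(len(labels), dtype=bool)

    # Hardest positive: the farthest embedding with the same label (excluding self).
    pos_dist = np.where(same & ~eye, dist, -np.inf).max(axis=1)
    # Hardest negative: the closest embedding with a different label.
    neg_dist = np.where(~same, dist, np.inf).min(axis=1)

    # Hinge on the margin; anchors lacking a positive or a negative are skipped.
    valid = np.isfinite(pos_dist) & np.isfinite(neg_dist)
    losses = np.maximum(pos_dist[valid] - neg_dist[valid] + margin, 0.0)
    return losses.mean() if losses.size else 0.0

rng = np.random.default_rng(0)
emb = rng.normal(size=(8, 16))
emb /= np.linalg.norm(emb, axis=1, keepdims=True)  # L2-normalize embeddings
labels = np.array([0, 0, 1, 1, 2, 2, 3, 3])        # e.g. two images per product
print(batch_hard_triplet_loss(emb, labels))
```

Mining inside the batch avoids a separate offline pass over the dataset, at the cost of only ever seeing the hard examples that happen to share a batch.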

PhD: Theoretical Neuroscience / Physics

I completed my PhD in the physics department at UC Berkeley, but my thesis work under Mike DeWeese at the Redwood Center for Theoretical Neuroscience was only “physics” in the spirit of its quantitative methods. My PhD dissertation can be found here and several major projects are described below.

Spatial whitening in the retina may be necessary for V1 to learn a sparse representation of natural scenes

(paper) Eric McVoy Dodds, Jesse Alexander Livezey, Michael Robert DeWeese (in preparation)

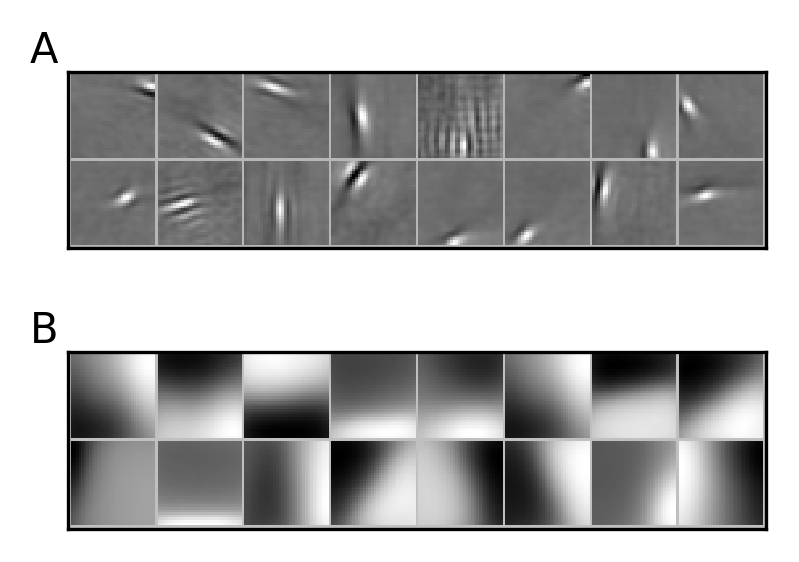

Atick and Redlich showed that the receptive fields of retinal ganglion cells are consistent with these cells whitening their inputs from photoreceptors (while suppressing high-frequency noise). They suggested that such a representation might be a step towards a representation in terms of statistically independent atoms. We suggest that this particular step may be taken because it is a necessary prerequisite for learning in cortex, regardless of the other benefits of a representation without pairwise correlations.
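
Natural images have an amplitude spectrum that falls off roughly as 1/f, so a filter whose gain rises linearly with spatial frequency approximately whitens them, and a low-pass roll-off suppresses the noisy high frequencies. The NumPy sketch below uses the filter R(f) = f exp(-(f/f0)^4) that Olshausen and Field used to preprocess images; it is a convenient stand-in, not Atick and Redlich's optimal filter, and the `cutoff` default is an assumed parameter.

```python
import numpy as np

def whiten_image(img, cutoff=0.8):
    """Whiten a square grayscale image with R(f) = f * exp(-(f / f0)**4).

    cutoff sets f0 as a fraction of the Nyquist frequency (an assumed default).
    """
    n = img.shape[0]
    if img.shape != (n, n):
        raise ValueError("this sketch assumes a square image")
    freqs = np.fft.fftfreq(n) * n          # cycles per picture, incl. negative frequencies
    fx, fy = np.meshgrid(freqs, freqs)
    f = np.sqrt(fx ** 2 + fy ** 2)         # radial spatial frequency
    f0 = cutoff * n / 2                    # roll-off frequency
    filt = f * np.exp(-(f / f0) ** 4)      # linear ramp (whitening) times low-pass (noise suppression)
    spectrum = np.fft.fft2(img - img.mean())
    # The filter is real and symmetric, so the inverse transform is real up to round-off.
    return np.real(np.fft.ifft2(spectrum * filt))

rng = np.random.default_rng(0)
out = whiten_image(rng.normal(size=(64, 64)))
print(out.shape)  # (64, 64)
```

Because the ramp is zero at f = 0, the whitened image also has zero mean, a loose analogue of the center-surround receptive fields of retinal ganglion cells.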

A successful and well-studied model of primary visual cortex (V1) simple cell function posits that V1 is optimized for sparse representations of natural scenes. Zylberberg and colleagues showed that this optimization could be (at least approximately) achieved using synaptically local learning mechanisms. That is, to update its strength in a direction that improves the objective, each synapse needs access only to its own current strength and the activity of the two neurons it connects. We show that these synaptically local mechanisms only drive the model to recover the sparse features of the data and form good sparse representations when the data is whitened before being input to the network.
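
To make "synaptically local" concrete, here is a NumPy sketch of Oja's rule, a classic local Hebbian update that is much simpler than the SAILnet rules (it is a stand-in, not Zylberberg's model). Each weight changes using only its own value, its input, and the neuron's output. Trained on correlated (non-white) data, a single such neuron aligns its weights with the highest-variance direction, the same bias toward high-variance directions that whitening is meant to neutralize. The data covariance and learning rate are arbitrary choices for the demo.

```python
import numpy as np

rng = np.random.default_rng(0)

# Correlated 2-D Gaussian data: variance 4 along one axis and 1 along the other,
# rotated by 30 degrees so the axes aren't aligned with the coordinates.
theta = np.deg2rad(30)
rot = np.array([[np.cos(theta), -np.sin(theta)],
                [np.sin(theta),  np.cos(theta)]])
cov = rot @ np.diag([4.0, 1.0]) @ rot.T
data = rng.multivariate_normal(np.zeros(2), cov, size=20000)

w = rng.normal(size=2)
lr = 0.005
for x in data:
    y = w @ x  # postsynaptic activity
    # Oja's rule: Hebbian term y*x minus a decay y^2*w that keeps |w| near 1.
    # Each component w[k] depends only on x[k], y, and w[k] itself: a local update.
    w += lr * y * (x - y * w)

top_pc = np.linalg.eigh(cov)[1][:, -1]  # eigenvector with the largest eigenvalue
alignment = abs(w @ top_pc) / np.linalg.norm(w)
print(alignment)  # close to 1: the neuron has found the highest-variance direction
```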

Data is white in this sense if all directions have the same variance (the covariance matrix is proportional to the identity matrix); whitening is also known as sphering. Intuitively, a neuron that doesn’t have access to other neurons’ contributions to the network’s representation will prefer the high-variance directions where its own contribution will be biggest. If the data is close enough to white, synaptically local mechanisms that decorrelate neurons’ activities using lateral inhibition can deal with this problem. But raw natural images are too far from white for this to work.
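
A minimal NumPy sketch of whitening in exactly this sense: ZCA whitening rescales each principal direction of the data to unit variance and rotates back, so the covariance of the result is (approximately) the identity. For images, each row of `x` would be a flattened patch; the small `eps` regularizer is an assumed value that keeps very low-variance (often noisy) directions from being amplified without bound.

```python
import numpy as np

def zca_whiten(x, eps=1e-5):
    """ZCA-whiten rows of x (samples x features) so the covariance becomes ~identity."""
    x = x - x.mean(axis=0)
    cov = x.T @ x / len(x)
    evals, evecs = np.linalg.eigh(cov)
    # Scale each principal direction to unit variance, then rotate back (ZCA).
    w = evecs @ np.diag(1.0 / np.sqrt(evals + eps)) @ evecs.T
    return x @ w

rng = np.random.default_rng(0)
mixing = rng.normal(size=(8, 8))
data = rng.normal(size=(5000, 8)) @ mixing  # correlated, non-white data
white = zca_whiten(data)
print(np.round(np.cov(white, rowvar=False), 2))  # approximately the identity
```

Of all whitening transforms, ZCA is the one that changes the data least, which is why whitened image patches still look like (edge-enhanced) images.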

Sparse structure of natural sounds and natural images

(paper) Eric McVoy Dodds, Michael Robert DeWeese

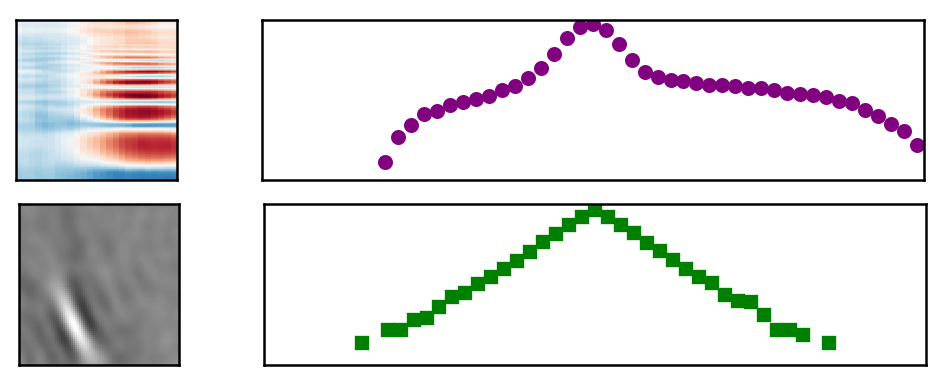

We report on differences and similarities in the structure of sparse linear components of sets of natural sounds and natural images, and we show consequences of the differences for a neurally plausible model.

Since Olshausen and Field showed that a network optimized to form sparse codes of natural image patches accounted for the receptive fields of simple cells in mammalian primary visual cortex, many studies have improved our understanding of sparse coding and the visual system. There has also been some successful work in the auditory domain, though the story there is less clear. It’s interesting that the principle of sparse coding seems to apply across multiple sensory modalities. My work with Mike is, as far as I know, the first to look at the sparse structure of data from two modalities side-by-side to better understand the universality of this principle and what differs between modalities. We also show that differences we observe in sparse coding of natural sounds have implications for models with biological constraints.
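
For concreteness, the sparse coding objective can be written as follows, where $\mathbf{x}$ is an input (an image patch or a snippet of sound), the columns of $\Phi$ are the learned features, $\mathbf{a}$ are the coefficients, and $S$ is a sparsity-promoting cost such as $|a_i|$. The notation is ours and may differ from the papers'.

$$
E(\mathbf{a}, \Phi) = \frac{1}{2}\left\lVert \mathbf{x} - \Phi\mathbf{a} \right\rVert_2^2 + \lambda \sum_i S(a_i),
\qquad
\Delta\Phi \propto \left\langle (\mathbf{x} - \Phi\mathbf{a}^*)\,\mathbf{a}^{*\top} \right\rangle
$$

Inference finds the coefficients $\mathbf{a}^*$ that minimize $E$ for each input with $\Phi$ fixed; learning then moves $\Phi$ down the gradient of $E$, averaged over many inputs (many implementations also renormalize the columns of $\Phi$ after each step).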

My TensorFlow implementation of the “locally competitive” sparse coding algorithm used in this work is here, and my Python implementation of Zylberberg’s “Sparse and Independent Local network” is here.
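
The locally competitive algorithm (LCA) of Rozell and colleagues finds sparse coefficients by simulating a network of leaky units that inhibit one another in proportion to how much their features overlap. Below is a minimal NumPy sketch, separate from the TensorFlow implementation, using soft thresholding (so the fixed points minimize squared reconstruction error plus λ times the L1 norm of the coefficients) and simple Euler integration; the step count, time constant, and λ are assumed values.

```python
import numpy as np

def soft_threshold(u, lam):
    # Shrink toward zero by lam; values with |u| <= lam become exactly zero.
    return np.sign(u) * np.maximum(np.abs(u) - lam, 0.0)

def lca_infer(x, phi, lam=0.1, tau=10.0, n_steps=300):
    """Locally competitive algorithm for sparse inference.

    x: input vector (n_inputs,); phi: dictionary (n_inputs, n_features) with
    unit-norm columns. tau is in units of the Euler step size.
    """
    b = phi.T @ x                                # feedforward drive
    g = phi.T @ phi - np.eye(phi.shape[1])       # lateral inhibition between overlapping features
    u = np.zeros(phi.shape[1])                   # internal "membrane potentials"
    for _ in range(n_steps):
        a = soft_threshold(u, lam)               # only units above threshold are active
        u += (b - u - g @ a) / tau               # Euler step of tau * du/dt = b - u - G a
    return soft_threshold(u, lam)

rng = np.random.default_rng(1)
phi = rng.normal(size=(64, 128))
phi /= np.linalg.norm(phi, axis=0)               # unit-norm features
a_true = np.zeros(128)
a_true[[3, 50, 99]] = [1.0, -0.8, 0.6]
x = phi @ a_true
a = lca_infer(x, phi)
print(np.count_nonzero(a))                       # most coefficients are exactly zero
```

Each unit's update depends only on its own input drive and the activity of units whose features overlap with its own, which is what makes the competition "local" and the algorithm plausible as a neural circuit.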

We have submitted this work to a journal and hope to see it published soon!

Causal efficient auditory coding

Course project, Fall 2016

My code for this project is here and I implemented a (near) copy of Smith and Lewicki’s method in TensorFlow here.
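
Smith and Lewicki's method represents a sound as a sparse set of "spikes," each a time-shifted, scaled copy of one of a set of kernels, chosen greedily by matching pursuit. Here is a minimal NumPy sketch of that encoding step alone. The random unit-norm kernels are placeholders (their method also learns the kernels, which this omits), and it is not my project code.

```python
import numpy as np

def matching_pursuit(signal, kernels, n_spikes=20):
    """Greedy convolutional matching pursuit.

    signal: 1-D array; kernels: list of 1-D unit-norm arrays, each no longer than signal.
    Returns a list of (kernel_index, time_offset, amplitude) spikes and the residual.
    """
    residual = np.array(signal, dtype=float)  # work on a copy
    spikes = []
    for _ in range(n_spikes):
        best = None
        for k, ker in enumerate(kernels):
            # Inner product of the kernel with the residual at every valid offset.
            corr = np.correlate(residual, ker, mode="valid")
            t = int(np.argmax(np.abs(corr)))
            if best is None or abs(corr[t]) > abs(best[2]):
                best = (k, t, corr[t])
        k, t, amp = best
        # Subtract the chosen kernel's contribution; for unit-norm kernels this
        # lowers the residual energy by exactly amp**2.
        residual[t:t + len(kernels[k])] -= amp * kernels[k]
        spikes.append((k, t, float(amp)))
    return spikes, residual

rng = np.random.default_rng(0)
kernels = [k / np.linalg.norm(k) for k in rng.normal(size=(4, 32))]
signal = np.zeros(256)
signal[10:42] += 2.0 * kernels[1]
signal[100:132] -= 1.5 * kernels[3]
spikes, residual = matching_pursuit(signal, kernels, n_spikes=5)
print(spikes[:2])  # recovers kernel 1 at offset 10 and kernel 3 at offset 100
```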

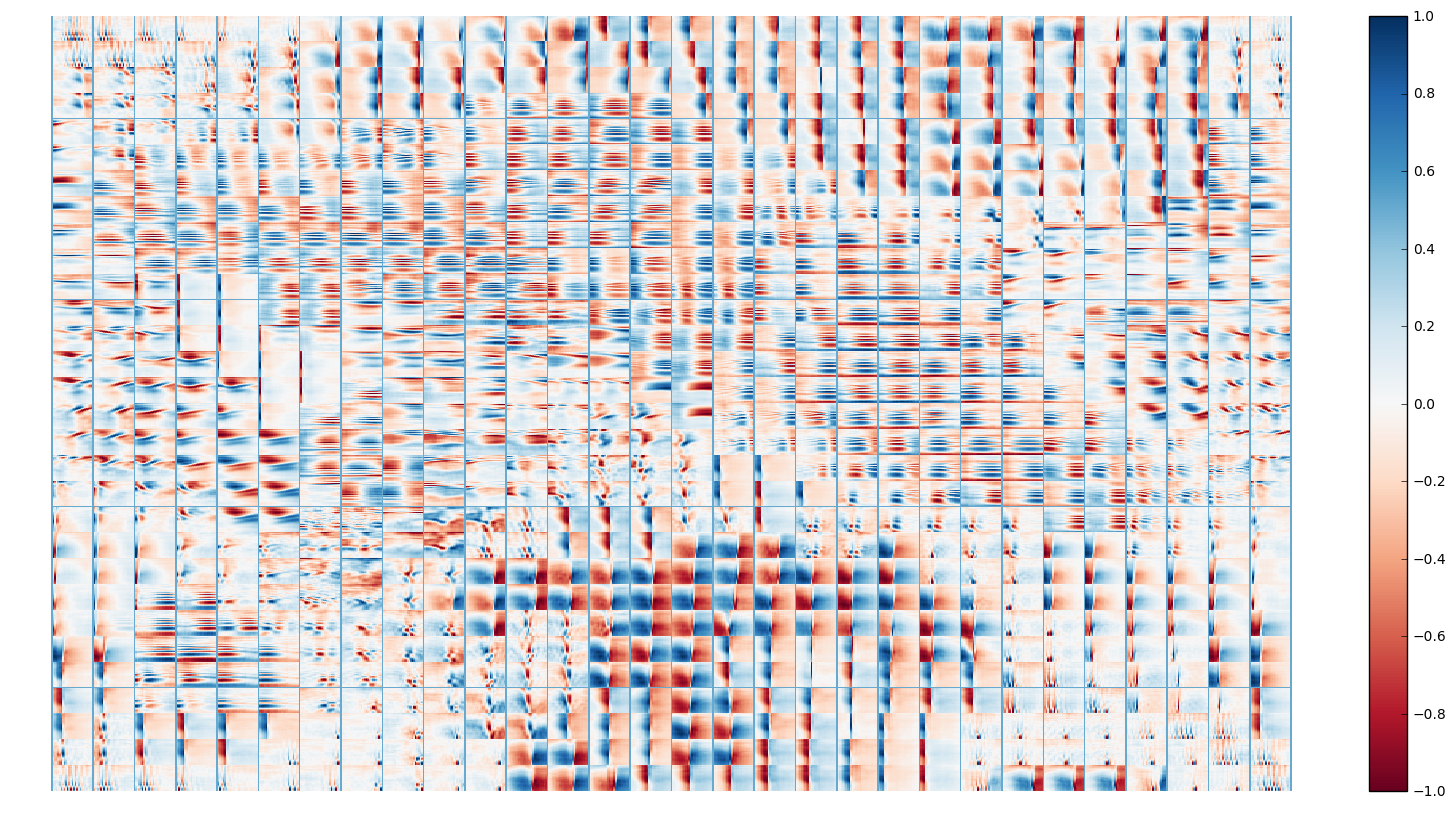

Topographic sparse coding of speech

A Berkeley undergrad named Teppei worked with me to apply topographic ICA to auditory data, an idea suggested by fellow Redwood student Jesse Livezey. I implemented a topographic version of the sparse coding network of Olshausen and Field in TensorFlow, which you can find here. We later discovered something very similar had already been done, but our results look cool. We hoped this model could help us understand how cells in a region of the mammalian early auditory system are organized by the stimuli to which they respond, and maybe it will yet…
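
In topographic ICA and topographic sparse coding, the units are laid out on a grid and the per-coefficient sparsity penalty is replaced by a penalty on pooled local energy, so units that tend to be active together are pushed to sit near each other. A minimal NumPy sketch of such a penalty follows; the square neighborhood, wraparound edges, and square-root nonlinearity are assumed choices, and this is not my TensorFlow implementation.

```python
import numpy as np

def topographic_penalty(a, grid_shape, radius=1, eps=1e-8):
    """Sum over grid positions of sqrt(local energy) in a (2r+1) x (2r+1) neighborhood.

    a: coefficient vector with prod(grid_shape) entries; edges wrap around.
    """
    energy = a.reshape(grid_shape) ** 2
    pooled = np.zeros_like(energy)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            # Shifted copies summed together give each position its neighborhood energy.
            pooled += np.roll(energy, shift=(dy, dx), axis=(0, 1))
    return np.sum(np.sqrt(pooled + eps))

grid = (8, 8)
clustered = np.zeros(64)
clustered[[0, 1]] = 1.0   # two neighboring active units
spread = np.zeros(64)
spread[[0, 36]] = 1.0     # two distant active units, same total energy
print(topographic_penalty(clustered, grid), topographic_penalty(spread, grid))
```

The clustered pattern is penalized less than the spread-out one even though both have the same number of active units, which is the pressure that organizes the learned features topographically.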

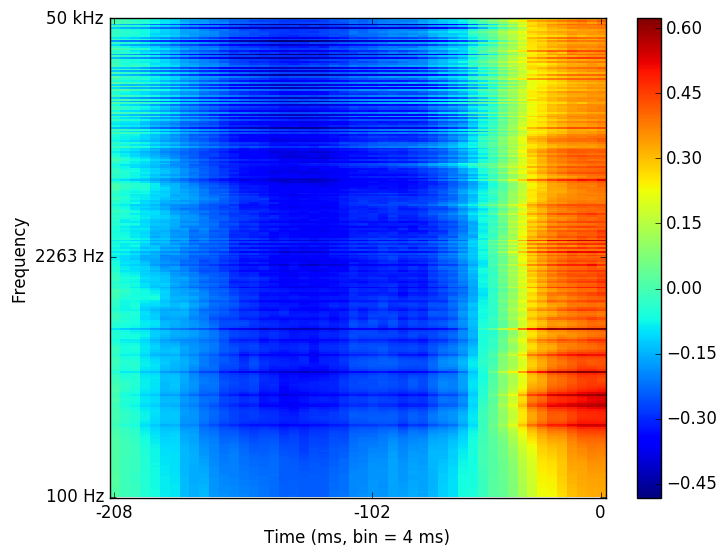

Analysis of single-neuron recordings from rat auditory cortex

When Vanessa, a student in the experimental side of the DeWeese lab, saw no structure in her hard-won single-neuron data, Mike brought his newest theory student (me) in to try some fancier analysis techniques. Sadly, this work yielded to other priorities when we convinced ourselves we weren’t going to find anything. The image here shows a representative “spectro-temporal receptive field.”
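
The simplest estimate of a spectro-temporal receptive field is the spike-triggered average (STA): the mean of the spectrogram windows that preceded each spike. Here is a minimal NumPy sketch, assuming a spectrogram and spike counts binned at the same time resolution; it is the standard starting point, not one of the fancier techniques mentioned above.

```python
import numpy as np

def spike_triggered_average(spectrogram, spike_counts, n_lags):
    """Spike-triggered average estimate of a spectro-temporal receptive field.

    spectrogram: (n_freqs, n_times) stimulus; spike_counts: (n_times,) spikes per bin.
    Returns an (n_freqs, n_lags) array whose last column is the bin containing
    the spike and whose earlier columns reach progressively further into the past.
    """
    stim = spectrogram - spectrogram.mean(axis=1, keepdims=True)  # zero-mean each frequency
    n_freqs, n_times = stim.shape
    sta = np.zeros((n_freqs, n_lags))
    n_spikes = 0
    for t in range(n_lags - 1, n_times):
        if spike_counts[t] > 0:
            # Weight the preceding stimulus window by the number of spikes in bin t.
            sta += spike_counts[t] * stim[:, t - n_lags + 1 : t + 1]
            n_spikes += spike_counts[t]
    return sta / n_spikes if n_spikes > 0 else sta
```

For white Gaussian stimuli the STA is proportional to the linear filter of a linear-nonlinear neuron; for structured stimuli such as natural sounds it is biased by stimulus correlations, one reason to reach for other methods.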

Along the way I did learn a lot about techniques for characterizing neural responses to stimuli. I also enjoyed coding a Python implementation of Sharpee, Rust, and Bialek’s “Maximally Informative Dimensions” algorithm, which finds the linear subspace of stimulus space whose projection carries maximal mutual information about the neural spike train.
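
The quantity at the heart of MID is the information a single spike carries about the stimulus projected onto a candidate direction v: the KL divergence between the distribution of projections given a spike and the distribution of all projections. Here is a minimal NumPy sketch that estimates it with histograms; the bin count is an assumed parameter, and the sketch omits the optimization over v that the full algorithm performs.

```python
import numpy as np

def single_direction_info(stimuli, spike_counts, v, n_bins=25):
    """Information (bits per spike) between spikes and the projection of stimuli onto v.

    stimuli: (n_samples, n_dims); spike_counts: (n_samples,); v: (n_dims,).
    The plug-in histogram estimate is biased upward when spikes are few.
    """
    x = stimuli @ (v / np.linalg.norm(v))
    edges = np.linspace(x.min(), x.max(), n_bins + 1)
    p_x, _ = np.histogram(x, bins=edges)                                 # all stimuli
    p_x_spike, _ = np.histogram(x, bins=edges, weights=spike_counts)     # spike-weighted
    p_x = p_x / p_x.sum()
    p_x_spike = p_x_spike / p_x_spike.sum()
    mask = p_x_spike > 0  # 0 * log 0 = 0; p_x > 0 wherever p_x_spike > 0
    return np.sum(p_x_spike[mask] * np.log2(p_x_spike[mask] / p_x[mask]))

rng = np.random.default_rng(0)
stim = rng.normal(size=(50000, 20))
counts = rng.poisson(np.exp(stim[:, 0]))  # toy neuron that depends only on dimension 0
info_relevant = single_direction_info(stim, counts, np.eye(20)[0])
info_irrelevant = single_direction_info(stim, counts, np.eye(20)[1])
print(info_relevant, info_irrelevant)  # relevant direction carries far more information
```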